The Death Of The PC As We Know It

Times are changing. Our society isn't exactly sure what the outcome will be, but the writing is on the wall. It was recently announced that the PC is experiencing the longest decline in sales since… well, ever.

Let’s zoom in on that last bit, shall we...

Ouch... Things didn't improve for very long after 2011.

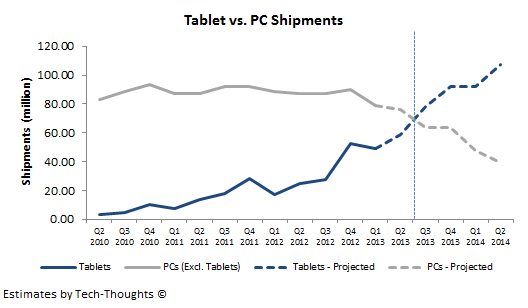

Our smartphones and tablets are taking over. You know they’re powerful, but HOW powerful exactly? The results may shock you!

Consider this:

A high-end 2013 smartphone has 3.35 times the performance* of a 2007 desktop PC CPU, 1.5 times the performance of a 2011 desktop PC CPU, and can manage 12.5% more performance than an Xbox 360. Umm... WHAT?! Most people don’t even realise that there is a mobile ‘revolution’ happening right under our noses. More exact details are in the main article.

*Performance measured in floating-point operations per second (FLOPS).

Now that I’ve got your attention...

In order to understand the grand scale of this ‘revolution’, let’s take a look back. The computer is possibly man’s greatest invention, after the ability to control the electrical current.

Here’s a brief but rather interesting overview of the origins of the computer and its history. The computer’s exact time of arrival into this world depends on your own definition, but it goes something like this:

ORIGINS

1812 | Charles Babbage had a vision for a general-purpose machine. The idea came to light when he noticed a vast number of mistakes in a table of logarithms. Babbage realised that the work could be done by a machine much faster and more reliably than by any human.

1930 | Massachusetts Institute of Technology (MIT) created a machine that could solve differential equations. A few years passed and scientists realised they could exploit the binary number system for use in electrical devices.

1938 | A German mathematician, Konrad Zuse, invents the "Z1". It had the makings of a modern computer, including a processor unit, memory and a binary number system in its operation.

1943 | America steps in with a 5 tonne calculator at Harvard University that caught the eye of International Business Machines (IBM).

Later that year, the famous 'Colossus' computer was born. It was the first programmable electronic digital computer and arguably helped the Allies win WWII via its ability to decipher enemy codes. It was a fatty though, at about 1 tonne. It had the awesome processing power of 1500 vacuum tubes.

1951 | The Universal Automatic Computer (UNIVAC) becomes the world's first commercial computer. One of its first tasks was to predict the 1952 U.S. presidential election outcome.

| Above: Colossus computer running Battlefield 4 |

MODERN COMPUTING

1960’s

The Apollo 11 guidance computer, which helped take man to the Moon, did so with just 2 KB of memory and 32 KB of storage, with an incredible CPU speed of 1.024 MHz. Even though it was a powerhouse for its day, let’s put this into context.

In 2013, a mobile phone can have not one, but four CPU cores clocked at 2.3 GHz (Snapdragon 800). That’s a clock speed 2,246 times higher than the Apollo 11 guidance computer’s (not including multitasking, which was done manually in 1969). Let’s keep going. Modern phones can have up to 3 GB of RAM (that’s 1,572,864 times as much!) with 64 GB of storage expandable up to a possible 96 GB (that’s 3,145,728 times as much!!). Think about that the next time you see someone Google “kitty cat memes” on their phone!
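If you want to check those ratios yourself, here is a minimal Python sketch. It assumes binary units (1 KB = 1024 bytes) and simply divides the phone figures quoted above by the Apollo ones; the dictionary names and the `ratios` helper are made up for illustration, and the hardware numbers are the article's, not independently verified specs.

```python
# Figures as quoted in the article; binary units are an assumption.
KB = 1024
MB = 1024 * KB
GB = 1024 * MB

# Hypothetical containers for the two machines being compared.
apollo = {"clock_hz": 1.024e6, "ram_bytes": 2 * KB, "storage_bytes": 32 * KB}
phone = {"clock_hz": 2.3e9, "ram_bytes": 3 * GB, "storage_bytes": 96 * GB}


def ratios(new, old):
    """Return how many times larger each figure in `new` is than in `old`."""
    return {key: new[key] / old[key] for key in old}


result = ratios(phone, apollo)
for key, value in result.items():
    print(f"{key}: {value:,.0f}x")
```

Under these assumptions the clock ratio comes out at roughly 2,246, the RAM ratio at 1,572,864 and the storage ratio at 3,145,728. Note that this only compares raw clock speed and capacity, not how much work each machine actually gets done per cycle.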

Also in the 1960’s, one man was about 25 years ahead of his time. His name was Douglas C. Engelbart. On December 9th, 1968 in San Francisco, the world was about to witness one of the greatest visionaries of all time. While hippies were frolicking outside on Haight-Ashbury, Engelbart gave a tech demo later dubbed “The Mother Of All Demos”. It featured word editing, hyperlinks, video conferencing, text and graphics, a mouse… It really was just amazing for its time. Sadly, Douglas Engelbart passed away on July 2nd, 2013.

Let his work live on...

1970’s

A great revolution was set in motion by the introduction of the Intel 4004 chip in 1971, and the computer made its way into our homes. At the spearhead of this movement was the Apple II. Apple would have forever been a fruit if it wasn’t for a little software package called VisiCalc. It turned the corporate world on its head: no longer was the PC just for hobbyists, it was now a real business tool. When accountants saw VisiCalc, they literally broke down and cried. Their lives were changed because, all of a sudden, what previously took them countless hours of painstaking work could now be done in seconds. The computer had become personal.

| Above: Behold. VisiCalc. |

| Above: Steve Jobs and Steve Wozniak having some classic laughs with an Apple II |

USER INTERFACE AGE

1980’s

It’s all about being the fastest and the most powerful. Primitive graphics and user interfaces first started showing up in 1981. You no longer had to be scared of the computer, since the mouse and pointer system made it child’s play. Gone were the days of typing instructions on a green screen. “You don’t even need to know how to program to use one”: well, that’s the world Steve Jobs wanted anyway. IBM and the big corporate side didn’t see the light, however; they just dismissed the format as “cute”. Meanwhile, in the home, the most dominant computer of the 80’s was the Commodore 64. Strangely enough, in the 80’s the British had a huge computer market compared to today. In 1983, the British company Acorn Computers began work on the ARM processor. Boom. Today ARM chips power 98% of mobile devices!

| Above: Bill Cosby likes the Commodore 64, it must be good. |

| Above: Some cool cats playing with a Commodore PET in 1983. |

INTERNET AGE

1990’s

By the 90’s, computers had vastly improved in their capabilities, and the PC exploded into just about every western home with help from a little thing called the World Wide Web. In 1996, two guys casually set up a small research project that would later grow to take over the world. Those two guys were Larry Page and Sergey Brin, and that project was ‘Google’. Windows was dominant, with Windows 95 and 98 capturing the new concept of the “information highway” and allowing absolute noobs to get online easily.

| Above: Bill Gates wearing all blue. A colour that he also liked to use in his error screens. |

2000’s

Now the whole world suddenly became smaller as emails and file sharing became commonplace. For the first time ever, you could see what the world was doing and instantly interact with it. We saw the rise of YouTube, Twitter, MySpace, Facebook, Tumblr, Wikipedia… etc. The world suddenly was full of worldwide interaction and information, and our PCs were the only real gateway. Until 2007. On January 9th, 2007, one moment changed the world. During a keynote, Steve Jobs calmly pulled a small glass slab out of his pocket. He touched the screen with his finger and flicked downwards through a list of music. The crowd was shocked; they could do nothing but gasp and cheer. No one had ever seen the human-technology barrier broken so clearly: no more buttons, remotes, things to get in the way. You could now interact with your machine physically.

This was of course, the introduction of the iPhone.

The iPhone’s slogan was “This is only the beginning”. You can’t argue with that...

But of course Android would later come along and beat its ass..

MOBILE AGE

In the 2010’s the landscape began to change. This is where we really begin our story.

I want you to shift your mind, just for the duration of what remains in this article. You can put it back when you’re done reading; just bear with me.

I want to propose an idea. What if I told you that the smartphone and tablet, those things that you take for granted every day, were the very early form, the infant stages, of the next iteration (or continuation) of the PC?

“HERESY!!”, you scream. It can’t run Crysis 4, it can’t do video editing, you can’t write code on it! It’s just a toy. It's forgivable to still see tablets as those annoying things which SHOULD be able to do everything but somehow aren’t QUITE hitting the spot. You'd be partially correct, but not entirely. Let me break it down for you:

There are two main groups of PC users: content CREATORS and content CONSUMERS. Most people have used a computer but have never written a line of code. I make videos, produce music, use word processors and have used some specialist engineering software. If it wasn't for these tasks (and a select few others), I could do almost everything else in my daily routine on my smartphone, right now.

This is where the content CONSUMERS come in. The content consumer demographic usually consists of people who are scared of, or don’t care about, technology and are “casual users”. All those viral videos of kitty cats, looking up cake recipes, Facebook, Twitter, browsing articles or news stories… I hate to admit it, but this population of PC users dominates. Why else do you think the iPad and other tablets are set to overtake PC sales?

People simply no longer need a netbook, and (perhaps) soon will not need an ultrabook to do most of this stuff. For $120 you can buy a Tegra 3 quad-core tablet that can do 80% of the stuff you need as a content consumer. Let’s put the stakes up a bit: what if we have a top-of-the-line smartphone? Can we account for the content creators? Assume we couple the device with an efficient dock that offers 4 USB ports and card readers, supports a full-size Bluetooth keyboard and mouse, and outputs 5.1 surround sound. Plug it all into a 30” monitor and you’ll have something like this:

| Above: Smartphone acting as a computer running Ubuntu. |

The concept is already here today. The only thing missing is the software and apps. Today the software side is simply NOT up to scratch, but the gap between PC and mobile devices will only narrow as app developers begin to realise this and really cash in on “killer apps.” A killer app, for example, may be a full version of Microsoft Office with no compromises for the mobile space. A killer operating system may even have to be someone’s brainchild. It’s coming, but we still have to compromise for now.

Speaking of compromises, a lot of the technologically literate readers out there are probably poised over their keyboards ready to fire off a comment about the CPU architecture. Yes, this is the one thing holding us back from TRULY being in a post-PC era today. Let me break it down once again. 98% of mobile devices use ARM architecture (remember that small British Acorn company in the 80’s from above… yeah). ARM processors are insanely power efficient but do not allow the same apps that run on, say, Windows or Mac OS. These apps just simply don’t readily run without severe modifications. Desktop PC operating system platforms use x86 architecture, which is a world away from ARM.

The only way I could see the two worlds coming together is if chip manufacturers such as AMD and Intel step up to the plate and produce ultra-low power, high performance x86 chips in the coming decades. Or if the apps developed on ARM portable devices become so capable that the need for x86 chips vanishes altogether (once again, decades away). The bottom line is that ultra-portable devices will continue to outsell PCs and it’s just a matter of time before the big app developers and CPU companies see the shiny new cash cow. These are my thoughts; no one can predict the future, except Marty McFly.

This is all truly amazing because the modern smart device has only been around for 5 years… 5 YEARS!! To clarify, a modern smart device is one that has a dominant capacitive touch screen input featuring the “App-dictates-all” concept. Digging deeper, the “App-dictates-all” concept means that the device is really just a blank canvas and it’s really the APPS that turn it into WHATEVER the user wishes. It’s just starting, give it a chance. The hardware and, most importantly, the software will vastly improve from where it is today.

On the topic of hardware, let's go back and look at the relative performances of PCs and mobile devices in more detail.

When we look at the full picture, we see that we still need 3x the power of today’s smartphone to catch up to a 2007 PC’s graphics card (PC CPUs obviously aren’t good at floating-point calculations). Mid-2015, anyone? As for the Millions of Triangles Per Second (MTPS), I couldn’t find any info on the Adreno 330 in the Snapdragon 800, but Qualcomm said it featured 2x the performance of the 320, which can push 275 MTPS. With this in mind, 300+ MTPS is a safe bet.

The bottom line is that our 2013 smartphones can be readily compared to the PlayStation 3 and Xbox 360... that idea alone blew me away. It must be noted that PC CPUs are still much more capable than mobile chipsets when it comes to multitasking, due to hyper-threading technology and the like.

I hate to be the one to predict the future and seem a fool, but I’m fairly certain that the time will come when we have one device that does it all. A device so small it fits in your pocket but has 5 times the floating-point and graphics performance of today’s PCs. I’m talking 2030 here; we still have to get over the problems of heat and power consumption, but computers were once the size of rooms so hey, what’s the harm in an open mind?

Next time you look at your phone, and you get frustrated when the GPS takes 10 seconds to find a lock, just remember that it’s going all the way to space and back! The computer that first sent the human race out there would probably take 6.24 hours to do the same thing at the very best!
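For the curious, that 6.24 hour figure appears to fall out of scaling the 10 second lock time by the clock-speed ratio from earlier (2.3 GHz versus 1.024 MHz). A rough Python sketch of that back-of-the-envelope sum is below. It assumes, very crudely, that run time scales linearly with clock speed, and the function and constant names are hypothetical.

```python
# Clock speeds as quoted in the article.
PHONE_CLOCK_HZ = 2.3e9      # Snapdragon 800
APOLLO_CLOCK_HZ = 1.024e6   # Apollo 11 guidance computer


def scaled_time_hours(modern_seconds, speedup):
    """Scale a modern run time by a speed-up factor and convert to hours.

    Assumes time is inversely proportional to clock speed, which ignores
    architecture, memory and everything else that matters in practice.
    """
    return modern_seconds * speedup / 3600


clock_ratio = PHONE_CLOCK_HZ / APOLLO_CLOCK_HZ
hours = scaled_time_hours(10, clock_ratio)
print(f"{hours:.2f} hours")
```

Treat it as a fun order-of-magnitude comparison rather than a benchmark; a real GPS fix depends on far more than CPU clock speed.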

Enjoy the rest of your day!

-CF

Krazit, Tom (April 3, 2006). "ARMed for the living room". CNet.com.